Navigating Compliance and Security with ChatGPT in a Regulated Environment

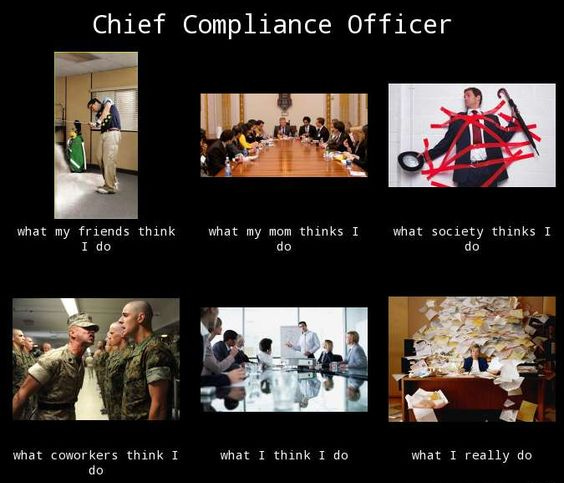

A Conversation with Brian Weiss, former Chief Compliance Officer of DBRS Morningstar

Introduction

In my journey across the financial landscape, I've had the pleasure of crossing paths with a number of brilliant minds, and one such individual is Brian Weiss. For a decade, Brian and I worked closely together at DBRS Morningstar, navigating the complex maze at the intersection of regulatory compliance, technology, and the ever-evolving world of finance.

Brian, with his vast knowledge and sharp acumen, served as the Chief Compliance Officer at DBRS Morningstar. He was instrumental in leveraging technology to meet stringent regulatory requirements while keeping our operations efficient. Today, Brian continues to be a formidable figure in the regulatory and compliance field.

In this post, we are fortunate to have Brian join us for an enlightening discussion on a crucial topic: the application of AI in a regulated environment, with a focus on ChatGPT. Brian will share insights on:

The evolving landscape of AI and compliance.

Key considerations when integrating AI tools into business operations within regulated environments.

The challenges of aligning AI with regulatory requirements, and strategies to overcome them.

Ensuring data security when using AI tools and understanding potential risks.

Join us as we delve into these intricate subjects and explore how to strike the right balance among AI integration, compliance, and security within regulated environments.

Understanding Regulated Environments

Regulated environments, like those in the financial industry, are characterized by a complex web of laws, regulations, and guidelines designed to maintain integrity and protect consumers. A multinational company must comply with the regulations of every country in which it operates, and those regulations are not uniform: one country's rules may be considerably more stringent than another's. As technology evolves, so do these regulations, and it's crucial to understand the implications of introducing AI into such an environment. Regulators around the world are grappling with how to manage these new technologies, and the landscape is continually shifting.

At its core, compliance in a regulated environment means adhering to all relevant laws, regulations, and industry standards. It's about more than just avoiding penalties or legal issues; it's about fostering trust with customers, stakeholders, and the wider public. By demonstrating a commitment to compliance, organizations can differentiate themselves in a crowded market and position themselves for long-term success. Compliance makes good business sense. It is now common for regulated companies to require their vendors and suppliers to meet the standards set by the company's own regulatory obligations, even when the vendor or supplier is not itself regulated.

Compliance and Governance When Using ChatGPT

When it comes to implementing AI tools like ChatGPT in a regulated environment, compliance and governance are paramount. Compliance entails ensuring that the use of AI doesn't infringe on any regulatory requirements, while governance involves creating policies, procedures, systems and processes that control how AI tools are used within the organization.

In the context of ChatGPT, organizations should consider several factors. For instance, they need to understand the model's capabilities and limitations, including potential biases in its outputs. Ensuring transparency in how the model is used and sharing this information with stakeholders is also critical.

Moreover, organizations need to implement robust governance structures, including a clear accountability framework for decisions made by or with the help of the AI. Regular auditing of the model's performance is also crucial to ensure it's working as intended and not causing unforeseen issues.

Data Security with ChatGPT

Data security is a crucial concern when using any AI tool, including ChatGPT. Financial institutions handle vast amounts of sensitive data, and it's their responsibility to protect this information from unauthorized access or breaches. When using AI tools, this responsibility extends to the data that the model has access to and how it's used.

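With ChatGPT, organizations should consider data security in two main areas: data in transit and data at rest. Data in transit refers to data moving between the organization and the AI vendor, such as prompts sent to the model and the responses it returns. Data at rest refers to stored data, whether on the vendor's servers (for example, conversation history or logs) or within the organization's own systems.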

In both cases, robust encryption should be used to protect the data, for example TLS for data in transit and strong encryption of stored data at rest. Additionally, access controls and secure authentication methods can help ensure that only authorized individuals can interact with the model or access the data.
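To make these two controls more concrete, here is a minimal Python sketch, assuming the AI vendor is reached over HTTPS. It builds a client TLS context that verifies the server's certificate and hostname and refuses protocol versions older than TLS 1.2 (protecting data in transit), and it applies a simple role-based check before a user may send anything to the model. The role names and the `User` type are hypothetical illustrations; encryption of data at rest is usually handled by the storage layer or the vendor and is not shown.

```python
import ssl
from dataclasses import dataclass

# Hypothetical allow-list of roles permitted to use the AI tool.
AUTHORIZED_ROLES = frozenset({"customer_service", "compliance_review"})


@dataclass(frozen=True)
class User:
    user_id: str
    role: str
    mfa_verified: bool  # True if the user completed multi-factor authentication


def make_tls_context() -> ssl.SSLContext:
    """Client-side TLS context for data in transit.

    create_default_context() already requires a valid certificate
    (CERT_REQUIRED) and checks the hostname; we additionally pin the
    minimum protocol version to TLS 1.2.
    """
    ctx = ssl.create_default_context()
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def can_access_ai_tool(user: User) -> bool:
    """Only MFA-authenticated users in an approved role may send prompts."""
    return user.mfa_verified and user.role in AUTHORIZED_ROLES
```

The context can be passed to a standard HTTPS client, for example `urllib.request.urlopen(request, context=ctx)`. In practice, the authorization decision would come from the firm's identity provider rather than a hard-coded set.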

Organizations should also pay close attention to the terms of service provided by the AI vendor. They should ensure that these terms align with their data security policies and that they are comfortable with how the vendor handles and protects their data.

Implementing AI tools in a regulated environment comes with unique challenges, but with careful planning and a commitment to best practices, organizations can navigate these challenges successfully.

A Conversation with Brian Weiss

As we shift gears into the heart of our discussion, we're incredibly fortunate to have Brian Weiss share his insights and expertise with us. With a rich background in compliance and governance, Brian's perspectives on the use of AI tools like ChatGPT in a regulated environment are invaluable. Having faced the challenges and explored the opportunities presented by this intersection of technology and regulation, Brian offers us a unique window into the world of AI governance in the financial sector.

In the following Q&A, we delve into his thoughts and reflections, providing a rare look at the frontlines of AI compliance.

How have you seen the intersection of AI and compliance evolve over the course of your career, and where do you see it heading in the future?

It is only since applications like ChatGPT became widely available to individuals that AI's use has appeared on the radar for compliance officers. While regulations governing the use of AI have not yet been adopted (and the key word is "yet"), regulators are already starting to ask companies how they use AI during routine regulatory examinations. As such, compliance officers need to urgently engage the business they support and turn their collective minds to determining how it wants to use, or not use, AI before a regulator starts asking questions.

The adoption of regulations will bring some clarity to companies and compliance officers in this space, but that may take some time. Momentum is building, however: in the U.S., for example, the President and Congress, several federal regulators, and some states have issued guidelines on the use of AI and have advocated for its regulation, and many countries around the world have followed suit with the goal of regulating AI.

What are the key compliance considerations when integrating AI tools like ChatGPT into business operations in a regulated environment?

Once an organization determines whether it wants to use AI, the compliance officer needs to evaluate which current regulations and existing policies and procedures govern that use.

The governance of AI use needs to draw on many of a company's control functions: risk, compliance, information security, data privacy, internal audit, and business management. Compliance officers should review all existing policies, procedures, business practices, and processes to determine which, if any, are affected by the use of AI, and update them as necessary. A few compliance considerations are:

Access to the AI platform – Controls should be reviewed to restrict access to the AI platform to authorized users, and to restrict access to the information entered into the AI platform and any information it produces, in accordance with established policies and procedures.

Record retention – Controls should be reviewed so that information generated using AI is retained in accordance with established policies and procedures.

AI Model Governance – Controls will need to be reviewed to govern how and when the AI model is changed or updated. The policies and procedures should cover how often the AI model is reviewed, the testing that must be completed before an update, and the approval required before the updated model is made available for use.

Existing regulations – Regulations that already exist may cover the use of AI. For example, the European Securities and Markets Authority ("ESMA") has issued guidelines on outsourcing to cloud service providers. If a company operates in the EU and is subject to oversight by ESMA, the compliance officer should help the company determine whether its use of AI falls under those guidelines. If so, the established policies and procedures should be followed.

Monitoring and Testing – The monitoring and testing conducted by the first and second lines of defense need to be updated to reflect any new or updated policies, procedures, and practices adopted because of the use of AI.
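The record-retention and AI model governance controls above can also be expressed as simple, testable checks. The minimal Python sketch below assumes a five-year retention period and three required sign-offs purely for illustration; both values and all names are hypothetical, and the real ones come from the firm's policies and applicable regulations. Each AI interaction is captured as an immutable record carrying its own retention date, and a model update can be released only after testing is complete and every required approver has signed off.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone

# Hypothetical retention period; the actual figure is set by policy/regulation.
RETENTION_PERIOD = timedelta(days=5 * 365)

# Hypothetical sign-offs required before an updated model goes live.
REQUIRED_APPROVERS = frozenset({"model_risk", "compliance", "business_owner"})


@dataclass(frozen=True)  # frozen: a record cannot be edited after creation
class InteractionRecord:
    user_id: str
    model_version: str
    prompt: str
    response: str
    created_at: datetime

    @classmethod
    def create(cls, user_id: str, model_version: str,
               prompt: str, response: str) -> "InteractionRecord":
        """Capture an interaction with a UTC timestamp."""
        return cls(user_id, model_version, prompt, response,
                   datetime.now(timezone.utc))

    def retain_until(self) -> datetime:
        """Earliest date on which this record may be disposed of."""
        return self.created_at + RETENTION_PERIOD


@dataclass
class ModelChangeRequest:
    """A proposed update to the AI model, tracked through governance."""
    new_version: str
    tests_passed: bool = False
    approvals: set[str] = field(default_factory=set)


def can_release(change: ModelChangeRequest) -> bool:
    """Release only after testing passes and every required approver signs off."""
    return change.tests_passed and REQUIRED_APPROVERS <= change.approvals
```

Recording the model version on each interaction also helps monitoring and testing: reviewers can tell which approved version of the model produced a given output.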

Building on those thoughts on AI model governance: AI can sometimes be a "black box," providing results without clear explanations of how they were derived. How do you approach transparency and explainability with generative AI tools, and how will teams ensure transparency with investors, regulators, and other stakeholders?

If a company is using AI models, it should expect regulators to ask questions. The company will need to demonstrate how it gained comfort that the model works as intended and produces consistent results. It will also need to understand and explain the data set used by the AI model. For example, what date range of information does the model use? From which country or countries does its data come? A company is going to need to understand these and many other aspects of the AI model.

Let me use an analogy. I like to think of an AI model as an ingredient in a recipe. Imagine if Betty Crocker changed the provider of flour used in its cake mix. Betty Crocker would need to explain to regulators (in this example, the Food & Drug Administration) how it knows the new flour is safe. Did Betty Crocker follow its policies and procedures for selecting the new flour? Did it review the safety record of the new vendor? On the commercial front, consumers are finicky: does the new flour change the taste or consistency of the finished product? Did Betty Crocker conduct taste tests with consumers? Those of us over a certain age remember Coca-Cola's debacle with New Coke. If you are not familiar with it, I encourage you to google it!

How can organizations ensure data security when using AI tools like ChatGPT, and what are the potential risks they should be aware of?

Even before ChatGPT and other AI platforms became widely available to individuals in November 2022, cybersecurity and data security were likely among the top risks facing many companies and public institutions. As I mentioned above, a company is going to want to make sure that only authorized users can access the AI tool and that the information it generates is available only to the appropriate groups. In addition, data privacy professionals will have to determine what data can be used as inputs to the AI platform. For example, can an authorized user enter personally identifiable information (PII) into the platform? Policies and procedures should be updated to specify what data can be used in the AI platform, and controls need to be established to restrict the data that can be entered accordingly.
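The question of whether users may enter personally identifiable information can be backed by a technical control that screens prompts before they leave the firm. The minimal Python sketch below assumes a policy of redacting rather than blocking, and detects and masks a few illustrative PII patterns: email addresses, U.S. Social Security numbers, and card-like numbers. The patterns and category names are hypothetical examples and far from exhaustive; a production control would rely on a vetted data-loss-prevention (DLP) tool and the firm's own definition of PII.

```python
import re

# Illustrative patterns only (hypothetical, not exhaustive).
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "CARD_NUMBER": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}


def find_pii(text: str) -> list[str]:
    """Return the names of the PII categories detected in the text."""
    return [name for name, pattern in PII_PATTERNS.items() if pattern.search(text)]


def redact_pii(text: str) -> str:
    """Replace each detected PII value with a placeholder such as [EMAIL]."""
    for name, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{name}]", text)
    return text
```

For example, `redact_pii("Client jane.doe@example.com, SSN 123-45-6789")` returns `"Client [EMAIL], SSN [US_SSN]"`. A stricter policy could instead refuse any prompt for which `find_pii` returns a non-empty list.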

Last question! How do you handle the ethical considerations that come with using AI tools, such as potential bias in the AI's output or decisions based on the data it was trained on?

A company should have clear policies, procedures, and controls in place for the use of AI. For example: When can it be used? Which business groups can use it? Does using an AI model require disclosure or attribution in a report or article? What reviews and approvals of the model's output are required before the finished article or report is published?

In addition, the company should consider the training provided to users of the model. Users should be trained on the data set the model uses, any biases the model may have in producing results, and how to correct for those biases. This is why testing the AI model is so important.

Case Study: ChatGPT in a Regulated Environment

To bring our discussion into a practical context, let's explore a hypothetical scenario where a financial institution decides to integrate ChatGPT into its operations. We'll look at how the compliance, governance, and data security considerations we've discussed with Brian come into play, with a particular focus on the handling of Material Non-Public Information (MNPI).

In our scenario, the institution wants to use ChatGPT to enhance its customer service operations by automating responses to customer queries and providing real-time assistance to its human customer service team. The potential benefits are significant: reduced response times, increased customer satisfaction, and more efficient use of resources.

However, the institution operates in a highly regulated environment. It's crucial to ensure that the use of ChatGPT complies with all relevant regulations and industry best practices. For example, the institution would need to:

Assess and Mitigate Risks: The institution would need to conduct a comprehensive risk assessment, considering potential compliance issues, data privacy risks, and the risk of bias or inaccuracy in the AI's responses. A crucial aspect of this would be ensuring that MNPI is not inadvertently shared with the AI platform, as this could have significant legal and reputational consequences.

Review Contracts and Terms of Use: Before implementing any AI tool, the institution should carefully review the provider's terms of use and other contractual obligations. This is particularly important when it comes to data handling and privacy provisions. The institution would need to confirm that the use of the AI tool does not violate these terms or any applicable laws or regulations.

Implement Governance Mechanisms: To maintain control over the AI's operations, the institution would need to put in place robust governance mechanisms. This could include regular audits of the AI's performance and the establishment of clear accountability structures.

Ensure Data Security: Given the sensitive nature of the data the AI would be handling, stringent data security measures would need to be in place. This could include encryption, access controls, and regular security testing.
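Keeping MNPI out of the AI platform is the step in this scenario that most lends itself to an automated check. One way to support it, sketched below in Python, is to screen each prompt against a restricted list maintained by compliance before it is sent, and to route any match to compliance instead of the AI tool. The list entries ("Project Falcon", "Acme Holdings") are hypothetical, and whole-word, case-insensitive matching is a deliberately simple assumption; real MNPI controls typically combine restricted and watch lists, information barriers, and human review.

```python
import re
from dataclasses import dataclass

# Hypothetical restricted list: issuers and deal code names about which the
# institution may hold MNPI. In practice this is maintained by compliance.
RESTRICTED_TERMS: tuple[str, ...] = ("Project Falcon", "Acme Holdings")


@dataclass(frozen=True)
class ScreeningResult:
    allowed: bool                  # False means: do not send to the AI tool
    matched_terms: tuple[str, ...]  # which restricted terms were found


def screen_for_mnpi(prompt: str,
                    restricted_terms: tuple[str, ...] = RESTRICTED_TERMS) -> ScreeningResult:
    """Flag a prompt that mentions any restricted term.

    Matching is case-insensitive and on whole words; re.escape ensures that
    punctuation inside a term is matched literally rather than as regex syntax.
    """
    matches = tuple(
        term for term in restricted_terms
        if re.search(rf"\b{re.escape(term)}\b", prompt, flags=re.IGNORECASE)
    )
    return ScreeningResult(allowed=not matches, matched_terms=matches)
```

A blocked prompt, together with its `matched_terms`, could then be logged for compliance review, which also supports the audit and accountability mechanisms described above.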

This case study underscores the need for a thoughtful and thorough approach when integrating AI tools into a regulated environment. It's not just about leveraging the benefits of AI; it's about doing so in a way that respects regulatory requirements, protects customers, and safeguards MNPI.

Conclusion

As we reach the end of this enlightening discussion with Brian Weiss, it's clear that the intersection of AI, compliance, and data security in a regulated environment is both a challenge and an opportunity. We've only just scratched the surface of this complex topic, and there's so much more to explore.

We invite you to continue the conversation by leaving your thoughts, questions, or experiences in the comments section below. If you've found this discussion valuable, please share it with your colleagues and networks who might also benefit.

Remember, navigating the future of finance and technology is a journey we're all on together. By sharing knowledge and fostering dialogue, we can better understand the landscape and help shape it for the better.

Stay tuned to Prompts.Finance for more deep dives and expert insights into the world of AI and finance. And if you haven't already, don't forget to subscribe so you never miss a post.

Keep prompting!